Avidclan Technologies Website

Avidclan Technologies

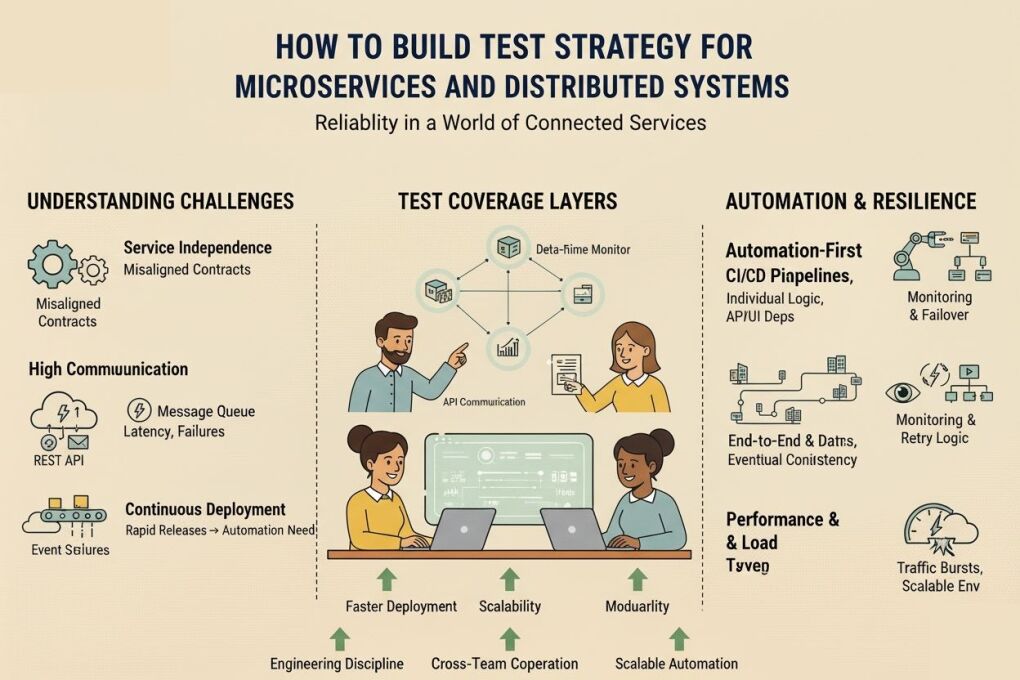

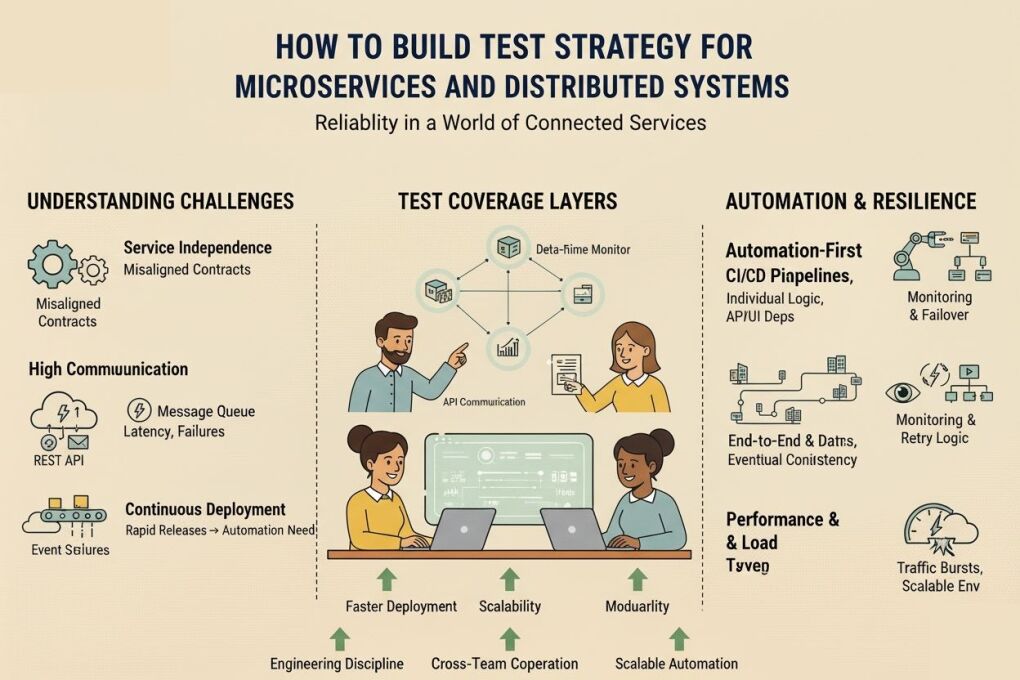

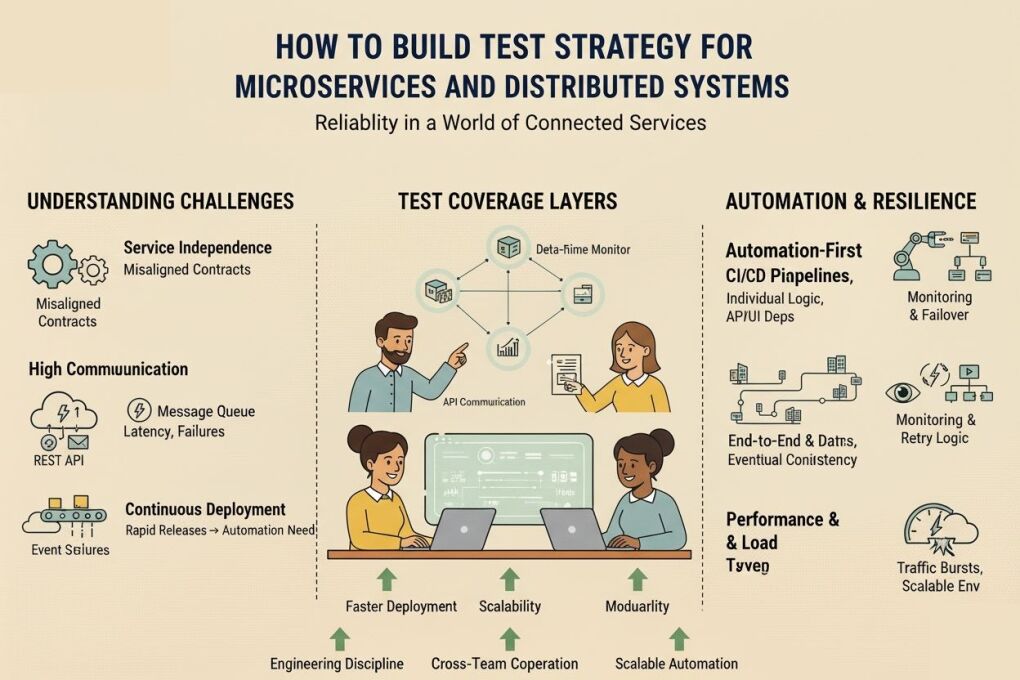

Modern software architectures are shifting from monolithic applications to microservices and distributed ecosystems. This shift lets organizations deploy faster, scale services independently, and develop modularly across business functions. Testing, however, has become more complicated because systems are no longer single entities. Many independently deployed, loosely coupled services must interact reliably through APIs, messaging layers, workflow events, and cloud infrastructure.

Quality assurance in microservices environments needs a tailored methodology that accounts for reliability, communication dependencies, service contracts, data synchronization, performance, and resilience under real-world load. Implementing the right test strategy means adopting automation, observability, environment orchestration, and failure simulation so that the system behaves consistently across distributed components.

Understanding Testing Challenges in Microservices Architecture

Service Independence and Shared Responsibility

Each service has its own logic, data storage, deployments, and teams. While independence accelerates development, it also increases the risk of misaligned communication contracts. Services may function individually but fail when integrated. Teams need a robust quality framework that validates integration flows rather than only unit-level logic.

High Number of Communication Points

Microservices interact through REST APIs, gRPC, message queues, and event streams. These channels introduce failure modes such as network latency, partial failures, payload mismatches, and idempotency errors. Realistic testing must replicate service discovery, distributed communication, and cloud orchestration behaviors.

Continuous Deployment Pressure

Rapid releases mean testing must keep pace with development velocity. This shifts traditional scripting and framework setup toward modern automation capabilities, including professional API automation testing services that support scalable test coverage across CI/CD pipelines.

Defining Test Coverage Layers for Distributed Systems

Unit and Component Testing

Individual service logic must be validated independently with mock dependencies and simulated data. Since microservices are owned by isolated teams, proper versioning and contract mapping are essential to avoid downstream issues.

Contract and Integration Testing

Testing communication formats ensures that producers and consumers remain compatible even when deployed separately. Schema-based contracts, consumer-driven contract testing (for example, with Pact), and automated validation workflows keep interaction patterns stable, and are commonly supported through enterprise-grade microservices testing services for distributed validation.
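To make the idea of a consumer-driven contract concrete, here is a minimal sketch in plain Python. It does not use Pact or any schema library; the `ORDER_CONTRACT` fields, the payloads, and the `contract_violations` helper are all hypothetical and exist only for illustration.

```python
from typing import Any, Dict, List

# Hypothetical contract: the fields a consumer relies on and their expected types.
ORDER_CONTRACT: Dict[str, type] = {
    "order_id": str,
    "total_cents": int,
    "status": str,
}


def contract_violations(payload: Dict[str, Any], contract: Dict[str, type]) -> List[str]:
    """Return human-readable violations; an empty list means the payload is compatible."""
    problems: List[str] = []
    for field, expected_type in contract.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected_type):
            problems.append(
                f"wrong type for {field}: expected {expected_type.__name__}, "
                f"got {type(payload[field]).__name__}"
            )
    return problems


# Extra fields are tolerated, so the provider can add data without breaking consumers.
compatible = {"order_id": "A-1", "total_cents": 1999, "status": "PAID", "currency": "EUR"}
# Renaming total_cents to total breaks this consumer even if the provider's own tests pass.
breaking = {"order_id": "A-1", "total": 19.99, "status": "PAID"}

print(contract_violations(compatible, ORDER_CONTRACT))  # []
print(contract_violations(breaking, ORDER_CONTRACT))    # ['missing field: total_cents']
```

Running a check like this in the provider's pipeline is one way to catch a breaking rename before deployment rather than after integration.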

End-to-End and Data Consistency Validation

End-to-end scenarios verify business outcomes across multiple services. In distributed ecosystems, data consistency typically relies on eventual consistency models, event sourcing, and distributed transactions. Teams must test real-world outcomes, not only internal interactions, using replicas of the production environment whenever possible.
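Eventual consistency means an end-to-end assertion often cannot run immediately after the triggering action. One common pattern is to poll until the expected state appears or a timeout expires. The sketch below assumes a hypothetical `FakeReadModel` standing in for a downstream service that catches up after a few reads; the names and timings are illustrative only.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout_s: float = 2.0, interval_s: float = 0.05) -> bool:
    """Poll condition until it returns True or the timeout elapses."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        if condition():
            return True
        time.sleep(interval_s)
    return condition()  # one final check at the deadline


class FakeReadModel:
    """Stand-in for a downstream service that becomes consistent after a few reads."""

    def __init__(self, reads_until_consistent: int) -> None:
        self._remaining = reads_until_consistent

    def order_status(self, order_id: str) -> str:
        if self._remaining > 0:
            self._remaining -= 1
            return "PENDING"
        return "PAID"


read_model = FakeReadModel(reads_until_consistent=3)
converged = wait_until(lambda: read_model.order_status("A-1") == "PAID")
print(converged)  # True
```

A bounded wait like this keeps the test deterministic: it passes as soon as the system converges and fails clearly if it never does.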

Automation, Tooling, and Resilience Testing

Automation-First Testing Model

Microservices require continuous validation across updates and dependency changes. Teams rely on automation frameworks designed for distributed orchestration and real-time execution pipelines. Organizations often collaborate with specialists in areas such as Selenium automation testing services when UI-level validation is required on top of service automation.

Where high-scale test engineering is essential, teams may seek dedicated expertise through partnerships that enable professional delivery, similar to engagement models where companies hire QA engineers for long-term automation maturity.

Monitoring, Observability, and Failover

Distributed communication over REST APIs, gRPC, message queues, and event streams is prone to network latency, partial failures, payload mismatches, and idempotency errors. Testers should therefore analyze latency, retry logic, timeout strategies, and fallback mechanisms across services, and confirm how each service behaves when a dependency fails.
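Retry and fallback behavior is easier to test when the failing dependency is simulated. The following sketch shows one possible shape for such a test: a retry helper with exponential backoff and a fallback, exercised against a hypothetical `FlakyService` that fails a fixed number of times. The helper, class, and values are assumptions for illustration and are not taken from any particular framework.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retry(
    operation: Callable[[], T],
    fallback: Callable[[], T],
    max_attempts: int = 3,
    base_delay_s: float = 0.01,
) -> T:
    """Try operation up to max_attempts times with exponential backoff, then fall back."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except ConnectionError:
            if attempt < max_attempts - 1:
                time.sleep(base_delay_s * (2 ** attempt))
    return fallback()


class FlakyService:
    """Hypothetical dependency that fails a fixed number of times before succeeding."""

    def __init__(self, failures_before_success: int) -> None:
        self.calls = 0
        self._failures = failures_before_success

    def get_price(self) -> int:
        self.calls += 1
        if self.calls <= self._failures:
            raise ConnectionError("simulated network failure")
        return 1999


recovering = FlakyService(failures_before_success=2)
print(call_with_retry(recovering.get_price, fallback=lambda: -1), recovering.calls)  # 1999 3

down = FlakyService(failures_before_success=10)
print(call_with_retry(down.get_price, fallback=lambda: -1), down.calls)  # -1 3
```

Asserting on the call count as well as the result verifies that the retry budget is respected, which matters when retries could amplify load on a struggling service.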

Performance and Load Evaluation

Testing must account for unpredictable traffic burst patterns and container lifecycle behaviors. Scalable cloud test environments, autoscaling metrics, and network-level resilience are essential for production-grade confidence.

Team Model, Collaboration, and Skill Structure

Testing Skill Distribution

A microservices testing team requires multiple competencies, including scripting, API validation, performance testing, DevOps automation, and containerization. Many organizations strengthen internal capability through support models such as hiring test automation experts for specialized delivery or integration into DevOps testing pipelines.

For UI automation within the microservices ecosystem, teams may extend framework development using engineering expertise similar to collaboration models where companies hire Selenium developers to build reusable automation layers for modular components.

For globally distributed delivery, enterprises may engage remote professionals based on flexible operating models, as seen when they hire remote developers for continuous development and maintenance cycles.

Centralized Quality Governance

While microservices promote decentralization, testing governance should not be fragmented. Shared standards, documentation templates, versioning rules, and observability frameworks allow consistency across distributed systems.

Organizations that require wider scope testing, including integration and regression, rely on structured capability models similar to professional Selenium testing services for web automation management aligned to domain-specific test maturity levels.

Conclusion

A strong test strategy for microservices and distributed systems cannot be built with traditional QA planning alone. It requires multi-layer validation, contract-based testing, service-level observability, fault-tolerance testing, and automation built into DevOps pipelines. The most reliable model is a hybrid approach that tests both individual components and end-to-end business processes.

The success of microservices testing relies on engineering discipline, cross-team cooperation, and scalable automation. Enterprise teams that align testing structures with architectural objectives deliver products faster, run more robust systems, and experience less downtime. Where internal capabilities require additional engineering depth, organizations may collaborate with service-driven partners offering enterprise solutions such as Selenium testing services aligned to digital product delivery.

The metaverse represents a paradigm shift in digital interaction: virtual and real worlds intersect to form immersive experiences that attract users worldwide. As more companies move into virtual worlds, demand for developers who can build sophisticated 3D applications keeps growing. React and Three.js have become a potent duo for building compelling metaverse experiences with strong performance and user satisfaction.

Why React and Three.js Are a Metaverse Dream

React Three Fiber (R3F) simplifies the historically difficult work of building 3D experiences by bringing React's familiar component system to Three.js graphics programming. Developers can build virtual worlds with intuitive React patterns while keeping the full power of Three.js rendering. The declarative nature of React Three Fiber makes complicated 3D scenes easier to manage and keeps codebases scalable.

In the past, creating 3D experiences was complex and time-consuming, and even simple geometric shapes required years of graphics programming experience. React Three Fiber lowers these barriers with a component-based approach that feels natural to web developers. By recruiting React.js developers proficient in Three.js, companies gain professionals who are familiar with both contemporary web development methods and current 3D graphics programming.

The framework provides sophisticated tools for managing complex 3D implementations, such as advanced lighting systems, realistic shadow rendering, and smooth animations. These features let developers build visually impressive virtual environments that can compete with native apps while remaining cross-platform compatible.

Getting Started: The Building Blocks

How to Configure Your First Metaverse Project

Successful metaverse projects start with a solid development environment. Modern developers typically begin with a React application scaffolded with a tool such as Vite, which offers faster builds and a better development experience. Setup then involves installing the Three.js libraries, configuring scene parameters, positioning the camera, and setting up lighting.

The initial learning curve is gentler than it might seem, especially for developers already familiar with React development patterns. Within a few hours of installation, developers can render their first 3D scenes with interactive objects and dynamic lighting. Professional ReactJS development services can help establish a sound environment, with adequate performance optimization and scalability, from the start of the project.

The development process resembles that of a conventional React app, except that components represent 3D objects, lights, and cameras instead of buttons and forms. This familiar structure speeds up learning and shortens the time needed to become productive in metaverse development.

Creating Worlds That Feel Alive

Static 3D scenes are only a starting point; real metaverse experiences must be interactive and responsive. Users expect to click, drag, navigate, and feel present in the virtual space. These interactive features turn passive viewing into active exploration and engagement.

Effective metaverse applications support many types of interaction, from simple mouse clicks to elaborate gesture recognition. Developers can use gaze-based interactions, controller input, and touch-responsive features to build an approachable user interface. Studies show that well-designed interactive applications retain far more active users than static ones.

The key to building engaging virtual worlds is understanding user behavior patterns and designing interactions that feel natural and responsive. Professional development teams do this best when they combine user experience design principles with technical knowledge.

Performance Optimization: How to Make It Quick and Fluid

Essential Performance Strategies

Performance optimization is essential to the success of metaverse applications, where users expect responsive experiences across many devices and platforms. Three.js supports rendering techniques such as frustum culling, which skips objects outside the camera's field of view, and Level of Detail (LOD), which reduces the complexity of objects as their distance from the camera increases.
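The Level of Detail idea can be sketched independently of any rendering library. The example below is plain Python, not the Three.js API: it picks a model variant from the camera-to-object distance. The thresholds and variant names in `LOD_LEVELS` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical levels: (minimum camera distance, model variant), sorted by distance.
LOD_LEVELS: List[Tuple[float, str]] = [
    (0.0, "high_detail"),
    (25.0, "medium_detail"),
    (100.0, "low_detail"),
]


def select_lod(
    camera: Sequence[float],
    obj: Sequence[float],
    levels: List[Tuple[float, str]] = LOD_LEVELS,
) -> str:
    """Return the variant for the farthest threshold the object has reached."""
    distance = math.dist(camera, obj)
    chosen = levels[0][1]
    for min_distance, variant in levels:
        if distance >= min_distance:
            chosen = variant
    return chosen


camera_position = (0.0, 0.0, 0.0)
print(select_lod(camera_position, (0.0, 0.0, 10.0)))   # high_detail
print(select_lod(camera_position, (0.0, 0.0, 40.0)))   # medium_detail
print(select_lod(camera_position, (0.0, 0.0, 150.0)))  # low_detail
```

In a real scene the renderer performs this selection every frame, so distant objects cost fewer triangles while nearby ones keep full detail.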

These techniques save a great deal of computation without compromising visual quality. Resource management techniques such as lazy loading, compressed textures, and efficient model loading help as well. A trustworthy React.js development company applies these optimizations methodically to ensure steady performance across different hardware configurations.

Memory management is especially important in complex virtual environments with many users and interactive elements. Careful garbage collection, texture pooling, and scene graph management help keep performance stable even during long sessions.

Cross-Platform Compatible Solutions

Contemporary metaverse solutions should run smoothly on a wide range of devices, including expensive VR headsets, smartphones, and budget laptops. This accessibility widens the audience and speeds up user adoption. Developers should apply responsive design principles to 3D environments as well.

Even 3D applications can follow a mobile-first strategy: begin with well-optimized models and effects for lower-capability devices, then layer on richer experiences for more capable ones. This approach maximizes compatibility across devices while still delivering high performance on high-end hardware.

Testing on many platforms and devices is necessary throughout development cycles. Professional teams maintain device testing labs to verify performance and functionality across the full range of target hardware.

Social Integration and Sophisticated Functionalities

Development of Social and Collaborative Aspects

Social functionality turns an isolated 3D space into a community where people can interact, collaborate, and build relationships. Applications built with React Three Fiber can incorporate real-time multiplayer systems, custom avatar systems, and social interaction elements that create meaningful connections in virtual space.

Implementing social features requires close attention to networking protocols, user authentication systems, and real-time data synchronization. These technical problems are manageable with suitable architecture planning and a skilled development team familiar with both 3D graphics and networking technology.

Effective social metaverse apps include voice chat, text messaging, gesture recognition, and collaboration tools that let people work on a project together or simply socialize in virtual worlds. These features contribute significantly to user retention and engagement.

External Connectivity and API Integration

Metaverse applications are rarely standalone; they need to connect to outside services, databases, and business systems. These connections enable capabilities such as user authentication, content management, e-commerce, and real-time data visualization in 3D spaces.

Professional ReactJS web development companies can help deliver trouble-free API integration while preserving the application's security and performance. Integration is a complex activity that requires careful planning of data transfer, caching, and error handling to provide a smooth connection between virtual environments and external systems.

Contemporary metaverse applications often incorporate blockchain-based technology, non-fungible token (NFT) marketplaces, cryptocurrency payments, and decentralized storage. Such integrations require expertise in Web3 technologies as well as in conventional web development.

Virtual Reality and WebXR Support

React Three Fiber's WebXR integration lets developers build immersive VR experiences that run directly in web browsers, without a separate native application. This lowers the barrier to entry for users and supports VR across WebXR-capable devices.

The framework is compatible with multiple VR headsets and input devices, which brings virtual experiences to a general audience without requiring dedicated software installation. WebXR support continues to grow as browser developers and hardware companies implement the standard.

VR-powered metaverse applications provide a new level of immersion in which users feel physically present in virtual places. The technology is particularly useful for training, virtual conferences, and entertainment, all of which benefit from a sense of space.

Search Engine Optimization and Accessibility

SEO for 3D Content

Metaverse applications that are meant to be discoverable should follow SEO best practices from the design stage. Developers should adopt server-side rendering (SSR) so that search engines can index 3D content appropriately and understand the application's structure.

Correct use of meta tags, structured data markup, and semantic URLs is therefore important for search engine visibility. These technical SEO features help search engines understand and classify metaverse content, improving organic discovery and user acquisition.

Optimization techniques for 3D applications differ from those for regular web pages. They require dedicated ways of describing virtual worlds, interactive features, and user experiences so that search engines can understand and index them well.

Constructing Inclusive Virtual Experiences

Accessibility in a 3D environment poses distinct challenges and demands careful design and implementation. Not every user can use a VR headset, and high-end graphics hardware is not available to everyone, so alternative interaction schemes and progressive enhancement are vital to inclusive design.

Effective accessibility practices include keyboard navigation, screen reader compatibility, alternative text for 3D objects, and adjustable performance settings that suit different hardware capabilities. These features make metaverse experiences usable across varying levels of ability and technical constraints.

Professional development teams focus on accessibility from the start of a project, applying universal design principles that benefit all users while meeting the needs of users with disabilities.

Conclusion: Creating the Future of Digital Interaction

Combining React with Three.js makes it possible to build metaverse experiences on the web, pairing the openness of web development with the capabilities of complex 3D graphics. Its component-based architecture, performance optimizations, and large community make the framework a strong starting point for ambitious virtual reality projects.

The metaverse landscape is changing fast as new technologies, standards, and user expectations keep emerging. Businesses that want a significant presence in the virtual world, without having to master its technical details and market dynamics themselves, can benefit from collaborating with qualified ReactJS development services.

Technical skills alone are not enough for metaverse development. User experience design, performance optimization, cross-platform compatibility, and accessibility standards are all essential to designing virtual worlds that engage users and deliver value over time.

Combining familiar React development patterns with the powerful 3D capabilities of Three.js gives developers an unprecedented set of tools for building the future of digital experiences. As the virtual and physical worlds merge, the possibilities for innovation and creativity in the metaverse are virtually unlimited.

The future belongs to developers and companies that embrace these new technologies and focus on user needs, accessibility, and performance. React with Three.js provides a solid foundation on which to build that future, experience by experience.

With testing tools appearing and disappearing as fast as fashion trends, the technology sector is changing quickly. Selenium, though? In tech years, this veteran automation tool has been around for what feels like an eternity. As we enter 2025, many people are wondering if Selenium is still worth the investment or if it will end up in the digital landfill.

Let's get past the noise and discover Selenium's true status in 2025.

Selenium's Staying Power: Why It's Not Going Anywhere

First of all, Selenium usage is not likely to stop anytime soon. According to recent market reports, the automation testing market is blowing up, expected to hit a whopping $52.7 billion by 2027. And guess what? Selenium is still grabbing a massive chunk of that pie.

Why? This is primarily due to its effectiveness. The underlying technology of Selenium is incredibly robust. Furthermore, numerous companies employ senior engineers who have transitioned into managerial positions and possess extensive knowledge of Selenium. When they need to set up testing, they naturally lean toward what they already know and trust.

Think about it: even a decade after Chrome and Firefox took over, companies were still supporting Internet Explorer 6 because of customer requirements. Technology that is deeply integrated into corporate operations does not just vanish overnight.

What's New with Selenium in 2025?

Since its release, Selenium 4 has revolutionized the industry. It's packed with improvements that have kept it relevant in 2025:

- Better Docker support

- Improved window and tab management

- Native Chromium driver for Microsoft Edge

- Relative locators that make finding elements way easier

- Better formatted commands for Selenium Grid

These upgrades aren't simply small changes; they're big enhancements that fix a lot of the problems developers encountered with prior versions. Selenium developers now have access to a considerably more powerful and versatile tool than they had only a few years ago.

The AI Factor: How Selenium Is Embracing Smart Testing

One of the coolest trends in 2025 is how services for Selenium automation testing are incorporating AI and machine learning. This isn't just buzzword bingo—it's making a real difference in how testing works.

Self-healing test automation is the perfect example. We've all been there: you write a perfect Selenium script, and then some developer changes an element ID, and suddenly your whole test suite breaks. Super annoying, right?

Now, AI-powered Selenium testing can actually identify alternative elements on the page when the expected one isn't found. The tests literally fix themselves at runtime. This advantage is huge for reducing maintenance headaches, which has always been one of Selenium's weak spots.
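The core of self-healing can be illustrated without any AI at all: keep several candidate locators for the same element and fall back when the primary one stops matching. The sketch below is a simplified stand-in, not how any specific product works. It uses a hypothetical `FakePage` instead of real Selenium calls, and a fixed priority list where real tools would rank candidates using learned similarity.

```python
from typing import Dict, List, Optional, Tuple

Locator = Tuple[str, str]  # (strategy, value), e.g. ("id", "submit-btn")


class FakePage:
    """Stand-in for a browser page; a real suite would query the DOM through WebDriver."""

    def __init__(self, elements: Dict[Locator, str]) -> None:
        self._elements = elements

    def find(self, locator: Locator) -> Optional[str]:
        return self._elements.get(locator)


def find_with_healing(page: FakePage, candidates: List[Locator]) -> Tuple[str, Locator]:
    """Try locators in priority order and report which one matched."""
    for locator in candidates:
        element = page.find(locator)
        if element is not None:
            return element, locator
    raise LookupError(f"no candidate locator matched: {candidates}")


# A developer renamed the button's id, so the primary locator no longer matches.
page = FakePage({
    ("id", "checkout-submit"): "<button>",
    ("text", "Place order"): "<button>",
})
candidates = [("id", "submit-btn"), ("text", "Place order")]

element, used = find_with_healing(page, candidates)
print(used)  # ('text', 'Place order')
```

Returning the locator that actually matched lets the suite log the healed lookup, so the team can update the primary locator instead of silently relying on the fallback.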

Companies that offer Selenium testing services are increasingly adding these AI capabilities to their toolkits, making tests more reliable and requiring less babysitting.

Cloud and Distributed Testing: Testing at a Whole New Scale

The way Selenium is used in distributed cloud systems has changed considerably by 2025. As the number of browsers, devices, and operating systems we need to test on grows, the old way of running Selenium Grid on local PCs doesn't work anymore.

Cloud-based Selenium testing is now standard, and distributed cloud designs have fixed the problems with latency that used to make remote testing hard. When you hire remote Selenium developers today, they're likely setting up tests that run simultaneously across multiple geographic locations, giving you faster results and better coverage.

This distributed approach means companies can run thousands of tests in parallel, cutting down testing time from hours to minutes. This significantly transforms CI/CD pipelines, where speed is crucial.
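The "hours to minutes" claim is simple arithmetic once tests are split across workers. The sketch below uses made-up numbers (1,200 tests at 6 seconds each, 100 workers) and ignores session startup and network overhead, so treat it as a back-of-the-envelope model rather than a benchmark.

```python
from typing import Dict, List


def shard_round_robin(tests: List[str], workers: int) -> List[List[str]]:
    """Assign tests to workers in round-robin order."""
    shards: List[List[str]] = [[] for _ in range(workers)]
    for index, test in enumerate(tests):
        shards[index % workers].append(test)
    return shards


def wall_clock_seconds(shards: List[List[str]], durations: Dict[str, float]) -> float:
    """The run finishes when the slowest shard finishes."""
    return max(sum(durations[test] for test in shard) for shard in shards)


# Hypothetical suite: 1,200 tests, each assumed to take 6 seconds.
tests = [f"test_{i}" for i in range(1200)]
durations = {test: 6.0 for test in tests}

serial = wall_clock_seconds(shard_round_robin(tests, 1), durations)
parallel = wall_clock_seconds(shard_round_robin(tests, 100), durations)

print(serial / 3600)   # 2.0 (hours on a single worker)
print(parallel / 60)   # 1.2 (minutes across 100 workers)
```

With uneven test durations, round-robin sharding leaves some workers idle; balancing shards by historical duration is a common refinement.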

Selenium vs. Newer Competitors: The Testing Showdown

It's important to acknowledge that tools such as Playwright are rapidly gaining traction. Microsoft's backing has given Playwright some impressive capabilities, especially for handling single-page applications and flaky tests.

But here's the thing: Selenium isn't going away. No single tool dominates the market. By 2025, we're seeing a hybrid approach where companies use:

- Selenium for their established, stable test suites

- Newer tools like Playwright for specific use cases where they excel

- AI-powered tools to complement both approaches

When you hire Selenium developers in 2025, you're often getting professionals who understand this ecosystem approach. They know when to use Selenium and when another tool might be better for a specific testing challenge.

The Job Market: Skills That Pay the Bills

If you're wondering about career prospects, the news is positive. Selenium developer jobs are still in high demand, with average annual salaries in the US ranging from $62,509 to $100,971. The automation testing market growth means opportunities for Selenium experts aren't drying up anytime soon.

What has changed is the skill set that employers look for when they hire remote Selenium developers. It's no longer enough to just know the basics. In 2025, the most in-demand professionals skilled in Selenium possess the following abilities:

- AI-augmented Selenium testing

- Cloud-based and distributed test architectures

- Integration with DevOps and CI/CD pipelines

- Performance optimization for large test suites

- Security testing automation

These specialized skills ensure your resume matches what recruiters are searching for in 2025.

The Cost Factor: Why Selenium's Price Tag Matters

One giant reason Selenium remains relevant in 2025? It's still free. Under the Apache 2.0 open-source license, all the core components—WebDriver, IDE, and Grid—cost exactly zero dollars.

This is especially important in a commercial environment where every budget is scrutinized. There are ancillary expenses like infrastructure, training, and maintenance, but Selenium is still a great deal compared to commercial options.

Because of this cost advantage, Selenium automation testing services can offer low prices and still deliver good results. This accessibility is a big selling point, especially for small firms and startups.

Real Talk: Selenium's Challenges in 2025

Let's be honest—Selenium isn't perfect. Some challenges persist even in 2025:

- Test flakiness can still be an issue, especially with complex UIs

- Setup and configuration have a learning curve

- Maintenance requires ongoing attention

- Performance can lag behind newer tools in some scenarios

Nevertheless, the community knows about these problems and is working to fix them. When you employ Selenium engineers who actually know what they're doing, they bring solutions and best practices that help avoid these problems.

Plus, the massive community support means that when problems do arise, solutions are usually just a Google search away. Try finding that level of support for a brand-new testing tool!

The Verdict: Is Selenium Worth It in 2025?

So what's the bottom line? Is Selenium still worth investing in for 2025 and beyond?

For most companies, the answer is yes, with some caveats. Selenium remains a solid choice if:

- You need a battle-tested solution with massive community support

- Cost is a big deal in your decision-making

- Your team already knows Selenium inside and out

- You're testing traditional web applications

- You want flexibility across different programming languages

In 2025, the most intelligent strategy is to strategically incorporate Selenium into a larger testing toolkit rather than relying solely on it. When you hire remote Selenium developers or partner with Selenium testing services, look for those who get this nuanced approach.

Looking Ahead: Selenium Beyond 2025

What's next for Selenium beyond 2025? While nobody can predict tech futures with 100% accuracy, a few trends seem pretty clear:

- Selenium will keep evolving, with AI integration becoming deeper and more seamless

- The ecosystem of complementary tools and frameworks will grow

- Cloud-based and distributed testing will become even more sophisticated

- The community will continue driving innovations that keep Selenium relevant

Industry experts are saying Selenium's market share will probably decrease but stabilize around 50% over the next five years, eventually finding a long-term place in the 5-20% range over the next decade. That's not Selenium dying; it's just settling into a role as a specialized tool with a dedicated user base.

Wrapping Up

Though it still has great worth, Selenium may not be quite as dominant in 2025 as it once was. Like COBOL programmers who still find employment decades after that language peaked, Selenium skills remain useful even as the environment changes. Even as you stay open to new ideas, it's worth keeping Selenium in your testing toolset. Selenium still deserves a place at the table, whether you want to employ engineers, work with Selenium testing services, or build your own testing capabilities in-house.

In 2025, testing isn't about finding the one best tool; it's about putting together the proper combination of technologies to fulfill your objectives.

The first time I walked into Janet's boutique, inventory sheets were scattered across her desk, three different POS systems were running simultaneously, and she looked like she hadn't slept in days. "I spend more time managing systems than managing my business," she confessed. Six months later, after implementing a custom .NET Core retail management solution, Janet's store was running like clockwork—inventory accurate to the item, staff focused on customers instead of paperwork, and Janet was finally taking weekends off.

This transformation isn't unique. I've seen it happen repeatedly across retail businesses of all sizes. Let me share what makes these systems work and how .NET Core has become my go-to framework for retail solutions that actually deliver results.

Why .NET Core Changes the Game for Retailers

Think about what retailers really need: reliability during holiday rushes, security for customer data, and systems that talk to each other. This is where .NET Core truly shines.

Working with an ASP.NET development company gives you access to cross-platform capabilities that traditional frameworks can't match. One sporting goods chain I worked with runs their warehouse operations on Linux servers while their in-store systems use Windows—.NET Core handles both environments seamlessly.

The performance benefits are measurable, too. When Black Friday hits and transaction volumes spike 500%, a well-architected system keeps processing sales without breaking a sweat. I've seen checkout times decrease by 40% after migration to optimized .NET Core solutions.

The Technical Edge That Matters

"But what makes it better than what I'm using now?" That's what Alex, a hardware store owner, asked me when we first discussed modernizing his systems.

The answer lies in the architecture. Modern ASP.NET Core development employs:

- Microservices that allow individual components to scale independently

- Robust caching mechanisms that reduce database load during peak times

- Containerization for consistent deployment across environments

- Built-in dependency injection for more maintainable, testable code

These aren't just technical buzzwords—they translate directly to business benefits. When Alex's store launched a major promotion that went viral, his new system handled the unexpected traffic surge without missing a beat.

The Building Blocks of Retail Success

Inventory Management: Where Most Systems Fail

Remember Janet from earlier? Her biggest pain point was inventory management, and she's not alone. Studies show that retailers typically have only 63% inventory accuracy without proper systems.

Through ASP.NET web development, we built an inventory module that delivered:

- Real-time visibility across her three locations

- Automated reordering based on customizable thresholds

- Barcode scanning integration with mobile devices

- Trend analysis to predict seasonal demands

The technical implementation included a distributed database architecture with local caching for performance and conflict resolution protocols for simultaneous updates.
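The threshold-based reordering rule can be sketched in a few lines. This is an illustration in Python under assumed names (`StockLevel`, `reorder_point`, `target_level`) and made-up quantities, not the module we actually shipped.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class StockLevel:
    sku: str
    on_hand: int
    reorder_point: int   # threshold that triggers a reorder
    target_level: int    # quantity to restock up to


def build_reorders(levels: List[StockLevel]) -> Dict[str, int]:
    """Return {sku: quantity_to_order} for items at or below their threshold."""
    return {
        s.sku: s.target_level - s.on_hand
        for s in levels
        if s.on_hand <= s.reorder_point
    }


levels = [
    StockLevel("SCARF-RED", on_hand=4, reorder_point=5, target_level=20),
    StockLevel("BELT-BLK", on_hand=12, reorder_point=5, target_level=20),
]
orders = build_reorders(levels)  # only SCARF-RED is below its threshold
```

Keeping the threshold and target per item is what makes the rule customizable: a fast seller can carry a higher reorder point than a slow one.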

"I finally trust my numbers," Janet told me three weeks after implementation. "Do you know how valuable that peace of mind is?"

Point-of-Sale Integration That Makes Sense

Marcus runs a busy electronics store where checkout delays mean lost sales. When we brought in specialist ASP.NET developers, our primary goal was a POS system that would never keep customers waiting.

The solution included:

- Offline processing capabilities for uninterrupted operation

- Customer-facing displays showing transaction details in real-time

- Integrated payment processing with tokenization for security

- One-touch access to customer purchase history for personalized service

The technical magic happens in the seamless communication between the POS and inventory systems. Each sale triggers inventory adjustments, reordering workflows, and customer profile updates—all within milliseconds.
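One common way to get offline processing is a store-and-forward queue: sales are recorded locally and sent in order once the connection returns. The sketch below shows that idea in Python. The `OfflineSaleQueue` class, the `send` callback, and the `link` flag are hypothetical stand-ins, and this is not a description of Marcus's actual system.

```python
from collections import deque
from typing import Callable, Deque, Dict


class OfflineSaleQueue:
    """Keep sales locally and forward them, in order, when the link is up."""

    def __init__(self, send: Callable[[Dict], bool]):
        self.send = send                      # returns True if the server accepted the sale
        self.pending: Deque[Dict] = deque()

    def record(self, sale: Dict) -> None:
        self.pending.append(sale)             # the sale is never lost, even offline
        self.flush()

    def flush(self) -> int:
        """Send queued sales oldest-first; stop at the first failure."""
        sent = 0
        while self.pending:
            if not self.send(self.pending[0]):
                break
            self.pending.popleft()
            sent += 1
        return sent


# Hypothetical network link and server, simulated in memory.
link = {"online": False}
delivered = []

def send(sale: Dict) -> bool:
    if link["online"]:
        delivered.append(sale["id"])
    return link["online"]


queue = OfflineSaleQueue(send)
queue.record({"id": 1, "sku": "TV-55", "qty": 1})
queue.record({"id": 2, "sku": "HDMI-2M", "qty": 2})
link["online"] = True
sent_after_reconnect = queue.flush()
```

The register keeps working while the network is down, and the inventory system sees the sales in their original order after reconnection.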

"Our average transaction time dropped from 4.2 minutes to just under 2 minutes," Marcus reported. "That's like adding another register during rush times."

Customer Analytics That Retailers Actually Use

"I know we have the data, but I can't do anything with it!" This was Carmen's frustration with her bakery chain's existing system. The solution came when we helped her hire ASP.NET MVC developers who understood both data architecture and retail operations.

The team created:

- Visual dashboards showing product performance by location, time, and customer segment

- Predictive modeling for daily production planning

- Customer cohort analysis identifying high-value segments

- Promotion effectiveness tracking with direct ROI calculations

The technical implementation involved a data warehouse with ETL processes that transformed transactional data into decision-support information.

"Now I know exactly which products to promote in which locations and to which customers," Carmen said. "Our targeted email campaigns have a 34% higher conversion rate than our previous generic ones."

Implementation Realities: Beyond the Code

The Migration Challenge

Let's be honest: switching systems is scary. When we helped a regional pharmacy chain hire ASP.NET programmers, their biggest concern was data migration.

"We can't afford to lose a single prescription record or customer profile," the owner emphasized.

Our approach involved:

- A comprehensive data audit identifying inconsistencies and corruption

- Parallel systems operation during a two-week verification phase

- Automated validation tools comparing outcomes between systems

- Staff verification of randomly selected records for human quality control

- Incremental cutover by department rather than a "big bang" approach

This methodical process took longer but eliminated the risks that keep retail owners up at night.
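The automated comparison step can be as simple as diffing records by key across the two systems. Here is a minimal Python sketch. The record IDs and fields are invented for illustration, and a real validation tool would also normalize formats before comparing.

```python
from typing import Dict, List


def compare_records(legacy: Dict[str, dict], migrated: Dict[str, dict]) -> Dict[str, List[str]]:
    """Report records that are missing, unexpected, or different after migration."""
    shared = legacy.keys() & migrated.keys()
    return {
        "missing": sorted(legacy.keys() - migrated.keys()),
        "unexpected": sorted(migrated.keys() - legacy.keys()),
        "mismatched": sorted(k for k in shared if legacy[k] != migrated[k]),
    }


# Hypothetical sample data keyed by record ID.
legacy = {
    "RX-1": {"customer": "A", "refills": 2},
    "RX-2": {"customer": "B", "refills": 0},
    "RX-3": {"customer": "C", "refills": 1},
}
migrated = {
    "RX-1": {"customer": "A", "refills": 2},
    "RX-2": {"customer": "B", "refills": 1},
    "RX-4": {"customer": "D", "refills": 3},
}
report = compare_records(legacy, migrated)
```

An empty report across all three lists is the signal that a department is ready for cutover.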

The Human Element: Training That Works

The best system in the world fails if people don't use it. When working with a dedicated ASP.NET development team, I always insist on comprehensive training programs.

For a furniture retailer with high staff turnover, we created:

- Role-based video tutorials under 3 minutes each

- Interactive practice environments mirroring the production system

- Laminated quick-reference guides for common tasks

- A "super user" program that trained internal champions

- Weekly "tip sessions" during the first month post-launch

"The difference was immediate," the operations manager told me. "Previous technology rollouts were met with resistance. This one had staff asking when they could start using the new features."

Technical Depth Without the Jargon

Architecture That Grows With Your Business

One bookstore I worked with started with three locations and expanded to 15 within two years. Their success was partly due to a scalable system architecture built by an experienced ASP.NET development team.

The technical implementation included:

- Distributed database architecture with central synchronization

- Load-balanced web services handling request spikes

- Containerized microservices that scale independently

- Automated deployment pipelines for consistent updates across locations

These sound technical, but they translate to business benefits: new stores can be set up in days rather than weeks, busy shopping seasons don't crash the system, and updates happen smoothly without disrupting operations.

Security By Design

"After that major retailer's data breach made the news, I realized we were vulnerable too," admitted Tony, who runs a chain of sporting goods stores.

Working with custom ASP.NET development services, we implemented a security-first approach:

- End-to-end encryption for all customer data

- Tokenization of payment information

- Role-based access control with strict least-privilege principles

- Regular automated security scanning and penetration testing

- Comprehensive audit logging and monitoring

The technical implementation followed OWASP security standards and PCI DSS compliance requirements—but what mattered to Tony was simpler: "I don't worry about being the next security breach headline."
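Least-privilege access control comes down to one rule: deny unless a role explicitly grants the permission. The Python sketch below illustrates that rule. The role and permission names are hypothetical, and a production system would load them from configuration rather than hard-code them.

```python
from typing import Dict, Set

# Hypothetical role-to-permission map; each role gets only what it needs.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "cashier": {"sale:create"},
    "manager": {"sale:create", "sale:refund", "report:view"},
}


def is_allowed(role: str, permission: str) -> bool:
    """Deny by default: unknown roles and unlisted permissions are rejected."""
    return permission in ROLE_PERMISSIONS.get(role, set())


cashier_can_sell = is_allowed("cashier", "sale:create")
cashier_can_refund = is_allowed("cashier", "sale:refund")
unknown_role_can_view = is_allowed("intern", "report:view")
```

Because the default answer is "no", adding a new role or feature never grants access by accident.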

The Evolution Never Stops

Retail technology doesn't stand still, and neither should your management system. The beauty of a well-architected .NET Core solution is its adaptability.

A home goods retailer I work with schedules quarterly enhancement sprints where we evaluate new technologies and customer feedback. Recent additions included:

- Mobile inventory counting with image recognition

- Customer-facing product locator kiosks

- AR-enabled mobile app for visualizing products in customers' homes

- AI-powered demand forecasting to optimize purchasing

These enhancements weren't part of the original system but were easily integrated thanks to the extensible architecture of their .NET Core foundation.

Conclusion: It's About Business, Not Just Technology

Janet, whose story opened this article, summed it up best: "I didn't invest in technology—I invested in transforming how we do business."

That's the mindset that leads to successful retail management systems. The technology stack matters, and .NET Core offers significant advantages for retail applications. But equally important is working with development partners who understand retail operations and can translate business needs into technical solutions.

The retailers who gain the most competitive advantage don't just digitize their existing processes—they reimagine what's possible when technology removes the limitations they've always accepted as "just part of doing business."

What retail challenges are holding your business back? The solution might be more accessible than you think.

Industry 4.0, also known as the fourth industrial revolution, is changing how manufacturers and logistics firms operate. From predictive maintenance to real-time monitoring, IoT is driving this change.

That raises a bigger question: where does .NET fit into all this?

With its cross-platform reach, cloud integration, and strong development tools, .NET has quietly become one of the best platforms for building scalable, dependable, and secure Industry 4.0 applications.

In this article, we examine how .NET supports smart device connectivity, which tools it offers for IoT integration, and how companies can use it to run smarter operations.

Why .NET Is a Strong Choice for Industry 4.0 Applications

.NET is no longer restricted to desktop and web applications. With .NET 6, 7, and 8 in particular, the platform has evolved to support real-time, edge, and cloud-based workloads, which makes it a strong choice for IoT and smart factory projects.

Here is why:

- Cross-platform development: create applications for embedded devices, Windows, and Linux.

- Scalability: .NET can handle high-throughput systems, whether they serve one device or one million.

- Cloud-native: easily connect on-site services with Azure IoT Hub and AWS IoT Core.

- Secure by design: built-in security mechanisms support device authentication and secure data transfer.

Many companies working with a .NET development firm are now adding data collection, automation, and smart alerts to their applications, all running on .NET-backed IoT infrastructure.

How .NET Links Smart Devices and Internet of Things Hardware

Connecting to Internet of Things (IoT) devices often means dealing with embedded systems and low-level hardware protocols. That might not seem like .NET territory, but things have changed.

.NET + IoT Hardware = A Powerful Combo

With the .NET IoT Libraries, you can:

- Communicate directly with GPIO pins, SPI, I²C, and PWM on devices like the Raspberry Pi.

- Connect embedded components such as motors and sensors.

- Write clean, maintainable device drivers in C#.

Developers can control physical hardware using modern programming paradigms, with no need to switch to C or Python unless they want to.

.NET's support for edge computing is developing as well; frameworks like Azure IoT Edge let you deploy .NET applications straight to gateways and edge devices closer to the data source.

For businesses offering .NET Core development services, this means delivering full-stack development, from edge sensors to cloud dashboards, in a single technology stack.

.NET and Azure IoT Real-Time Data Processing

Collecting data is not enough in Industry 4.0. To gain insights and drive automation, you must process it in real time.

.NET's Azure IoT service integration makes this possible:

- Secure two-way communication between devices and cloud services is managed by Azure IoT Hub.

- Real-time event processing and business logic execution are enabled by Azure Functions.

- Trend detection and anomaly tracking are aided by Stream Analytics and Time Series Insights.

Typical application scenarios:

- Detect abnormal temperatures in manufacturing machines and trigger a real-time alert.

- Track energy consumption across several buildings to help optimize costs.

- Use live sensor data to guide warehouse robots.
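The temperature-alert scenario can be sketched as a rolling-window check. The example is in Python to stay language-neutral and runs locally, with no Azure service calls. The window size, threshold, minimum spread, and sample readings are assumptions chosen for illustration.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque


class TemperatureMonitor:
    """Flag readings that deviate sharply from the recent window."""

    def __init__(self, window: int = 5, z_threshold: float = 3.0, min_spread: float = 0.5):
        self.readings: Deque[float] = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_spread = min_spread   # floor so a perfectly flat window does not alert on noise

    def observe(self, value: float) -> bool:
        is_anomaly = False
        if len(self.readings) == self.readings.maxlen:
            avg = mean(self.readings)
            spread = max(pstdev(self.readings), self.min_spread)
            is_anomaly = abs(value - avg) > self.z_threshold * spread
        self.readings.append(value)
        return is_anomaly


monitor = TemperatureMonitor()
readings = [70.0, 70.5, 69.8, 70.2, 70.1, 95.0]   # hypothetical sensor values
alerts = [monitor.observe(r) for r in readings]
```

Only the final reading is flagged, because the first five are used to establish what "normal" looks like for this machine.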

If you are hiring dedicated .NET developers, they can help design these systems end to end, from the device firmware to the analytics pipeline.

Building IoT Dashboards and APIs with .NET

Once your smart devices are running, you need a way to control the system and display the data. This is where ASP.NET Core shines.

Using ASP.NET Core and Blazor, you can create:

- Responsive web dashboards for tracking IoT networks

- Administrative access portals for status tracking and device management

- APIs that connect mobile apps or external systems

Blazor is well suited to real-time dashboards because it supports interactive UI updates via SignalR and WebSockets.

Staying within the .NET ecosystem avoids language switching and keeps code consistent across backend, front-end, and IoT logic. The efficiency gains show quickly, which is why many companies engage dedicated .NET developers for Industry 4.0 initiatives.

.NET for IoT: Challenges and Factors to Consider

No platform is flawless, and there are some important factors to weigh when choosing one.

- Device footprint: although .NET has improved, very low-powered microcontrollers such as Arduino may still call for other languages.

- Cold start time: unless pre-warmed, some cloud functions, such as Azure Functions written in C#, may show a slight delay on the first run.

- Library support: not all sensors have native .NET libraries, so custom development is sometimes needed.

That said, .NET is more than adequate for most industrial uses, particularly those involving Raspberry Pi, Windows IoT, or Linux-based edge devices.

An experienced .NET development firm can help you navigate these choices and select the right tools for your project without over-engineering or under-delivering.

Conclusion: .NET Is Industry 4.0 Ready

Industry 4.0 demands platforms that are flexible, scalable, and future-proof, and .NET meets all three requirements.

From managing smart sensors to building interactive dashboards and integrating cloud analytics, .NET gives you a complete toolkit for modern IoT solutions. With native Azure integration, a robust developer ecosystem, and evolving open-source libraries, bridging the physical and digital worlds has never been easier.

Whether you are starting from scratch or building on an existing system, now is the time to explore what .NET can do for your smart factory or industrial application. If you need professional guidance, consider working with a .NET development firm that knows how to integrate IoT with business applications properly.

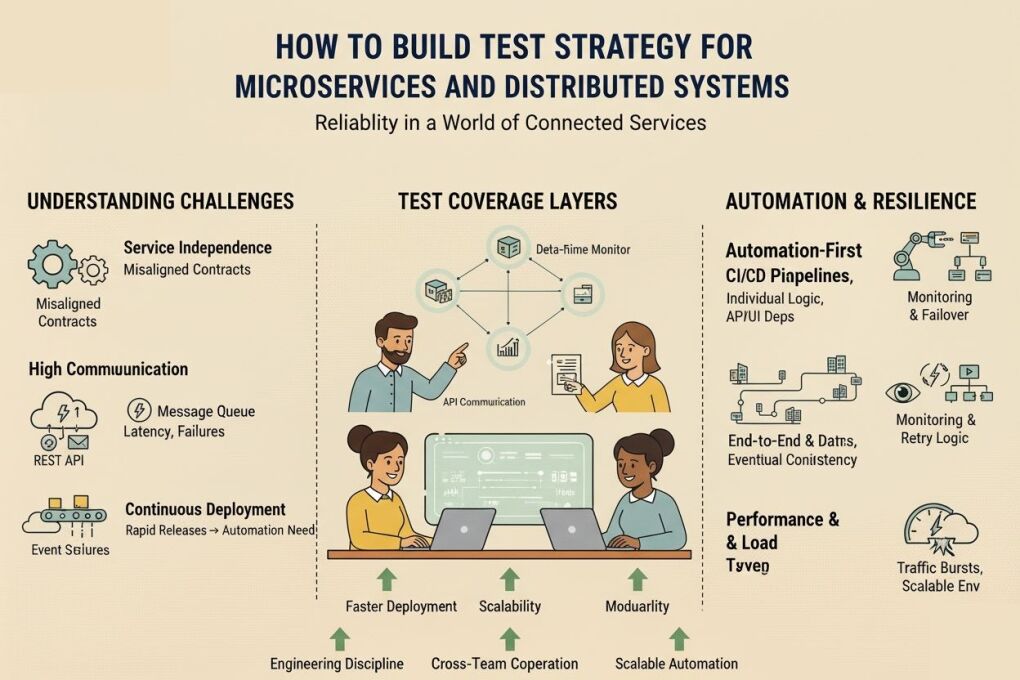

Modern software architectures are moving from monolithic applications to microservices and distributed ecosystems. This shift lets organizations deploy faster, scale independently, and develop modularly across business functions. Testing, however, has become more complicated because systems are no longer single entities. Many independently deployed, loosely coupled services must interact reliably through APIs, messaging layers, workflow events, and cloud infrastructure.

Quality assurance in microservices environments needs a tailored methodology that accounts for reliability, communication dependencies, service contracts, data synchronization, performance, and resilience under real-world load. The right test strategy combines automation, observability, environment orchestration, and failure simulation so that the system behaves consistently across distributed components.

Understanding Testing Challenges in Microservices Architecture

Service Independence and Shared Responsibility

Each service has its own logic, data storage, deployments, and teams. While independence accelerates development, it also increases the risk of misaligned communication contracts. Services may function individually but fail when integrated. Teams need a robust quality framework that validates integration flows rather than only unit-level logic.

High Number of Communication Points

Microservices interact through REST APIs, gRPC, message queues, and event streams. These channels introduce network latency, partial failures, payload mismatches, and idempotency errors. Realistic testing must replicate service discovery, distributed communication, and cloud orchestration behaviors.
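Idempotency errors are easiest to see with a message that is delivered twice. The Python sketch below shows a consumer that deduplicates by message ID, along with the kind of check a test would make. The `PaymentConsumer` class and message fields are hypothetical.

```python
from typing import Dict, Set


class PaymentConsumer:
    """Apply each message at most once, even if the broker redelivers it."""

    def __init__(self) -> None:
        self.processed_ids: Set[str] = set()
        self.balance_cents = 0

    def handle(self, message: Dict) -> bool:
        if message["id"] in self.processed_ids:
            return False                      # duplicate delivery: ignore
        self.balance_cents += message["amount_cents"]
        self.processed_ids.add(message["id"])
        return True


consumer = PaymentConsumer()
msg = {"id": "evt-42", "amount_cents": 1500}
first_applied = consumer.handle(msg)
second_applied = consumer.handle(msg)   # simulated redelivery of the same event
```

A test that replays the same event and asserts the balance changed only once catches the most common form of this bug.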

Continuous Deployment Pressure

Rapid releases mean testing must keep pace with development velocity. This shifts traditional scripting and framework setup toward modern automation capabilities, including professional API automation testing services that support scalable test coverage across CI/CD pipelines.

Defining Test Coverage Layers for Distributed Systems

Unit and Component Testing

Individual service logic must be validated independently with mock dependencies and simulated data. Since microservices are owned by isolated teams, proper versioning and contract mapping are essential to avoid downstream issues.
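As a minimal illustration of testing service logic with a mocked dependency, the Python sketch below uses the standard library's `unittest.mock`. The `OrderService` class and its inventory client interface (`available`, `reserve`) are assumptions invented for the example.

```python
from unittest.mock import Mock


class OrderService:
    """Service under test; the inventory client is injected so it can be mocked."""

    def __init__(self, inventory_client):
        self.inventory = inventory_client

    def place_order(self, sku: str, qty: int) -> str:
        if self.inventory.available(sku) < qty:
            return "rejected"
        self.inventory.reserve(sku, qty)
        return "accepted"


# The mock stands in for the real inventory service and reports 3 units in stock.
inventory = Mock()
inventory.available.return_value = 3

service = OrderService(inventory)
result_ok = service.place_order("SKU-1", 2)
result_rejected = service.place_order("SKU-1", 5)
```

The test verifies both the return value and the interaction: stock is reserved exactly once, for the order that was accepted.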

Contract and Integration Testing

Testing communication formats ensures that producers and consumers remain compatible even when deployed separately. Schema-based contracts, consumer-driven pact testing, and automated validation workflows ensure stable interaction patterns, commonly supported through enterprise-grade microservices testing services for distributed validation.
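The core idea of a consumer-driven contract is that the consumer states which fields it relies on, and the provider's responses are checked against that statement. The Python sketch below is a hand-rolled illustration of that check, not the API of Pact or any other contract-testing tool. The field names are hypothetical.

```python
from typing import Any, Dict, List

# Fields the (hypothetical) consumer depends on, with their expected types.
CONSUMER_CONTRACT: Dict[str, type] = {
    "order_id": str,
    "total_cents": int,
    "status": str,
}


def contract_violations(response: Dict[str, Any], contract: Dict[str, type]) -> List[str]:
    """List every way the response breaks the consumer's expectations."""
    problems = []
    for field, expected in contract.items():
        if field not in response:
            problems.append(f"missing field: {field}")
        elif not isinstance(response[field], expected):
            problems.append(f"wrong type for {field}: expected {expected.__name__}")
    return problems


good = {"order_id": "A-1", "total_cents": 4200, "status": "paid", "extra": True}
bad = {"order_id": "A-2", "total_cents": "42.00"}

good_problems = contract_violations(good, CONSUMER_CONTRACT)
bad_problems = contract_violations(bad, CONSUMER_CONTRACT)
```

Extra fields are tolerated on purpose, so the provider can add data without breaking consumers. Removing or retyping a field the consumer relies on fails the check.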

End-to-End and Data Consistency Validation

End-to-end scenarios verify business outcomes across multiple services. In distributed ecosystems, data consistency is maintained using eventual consistency models, event sourcing, and distributed transactions. Teams must test real-world outcomes, not only internal interactions, using replicas of the production environment whenever possible.

Automation, Tooling, and Resilience Testing

Automation-First Testing Model

Microservices require continuous validation across updates and dependency changes. Teams rely on automation frameworks designed for distributed orchestration and real-time execution pipelines. Organizations often collaborate with specialists in areas such as Selenium automation testing services when UI-level validation is required on top of service automation.

Where high-scale test engineering is essential, teams may seek dedicated expertise through partnerships that enable professional delivery, similar to engagement models where companies hire QA engineers for long-term automation maturity.

Monitoring, Observability, and Failover

Because services communicate over the network, failures such as latency spikes, partial outages, payload mismatches, and idempotency errors must be expected. Testers should analyze latency, retry logic, timeout strategies, and fallback mechanisms across services.
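Retry logic is one of the easier behaviors to test in isolation. The Python sketch below shows a retry helper with exponential backoff and a fake dependency that fails twice before succeeding. The function names, attempt count, and delays are assumptions for illustration, and the sleep function is injected so the test runs without waiting.

```python
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")


def call_with_retry(
    op: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry on ConnectionError, doubling the delay after each failure."""
    for attempt in range(attempts):
        try:
            return op()
        except ConnectionError:
            if attempt == attempts - 1:
                raise                              # out of attempts: surface the failure
            sleep(base_delay * 2 ** attempt)
    raise ValueError("attempts must be at least 1")


# Hypothetical flaky dependency: fails twice, then succeeds.
state = {"calls": 0}

def flaky_call() -> str:
    state["calls"] += 1
    if state["calls"] < 3:
        raise ConnectionError("simulated network failure")
    return "ok"


delays: List[float] = []
result = call_with_retry(flaky_call, sleep=delays.append)
```

Recording the delays lets a test assert not only that the call eventually succeeded, but also that the backoff schedule was followed.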

Performance and Load Evaluation

Testing must account for unpredictable traffic burst patterns and container lifecycle behaviors. Scalable cloud test environments, autoscaling metrics, and network-level resilience are essential for production-grade confidence.

Team Model, Collaboration, and Skill Structure

Testing Skill Distribution

A microservices testing team requires multiple competencies, including scripting, API validation, performance testing, DevOps automation, and containerization. Many organizations strengthen internal capability through support models such as hiring test automation experts for specialized delivery or integration into DevOps testing pipelines.

For UI automation within the microservices ecosystem, teams may extend framework development using engineering expertise similar to collaboration models where companies hire Selenium developers to build reusable automation layers for modular components.

For globally distributed delivery, enterprises may engage remote professionals based on flexible operating models, as seen when they hire remote developers for continuous development and maintenance cycles.

Centralized Quality Governance

While microservices promote decentralization, testing governance should not be fragmented. Shared standards, documentation templates, versioning rules, and observability frameworks allow consistency across distributed systems.

Organizations that require wider scope testing, including integration and regression, rely on structured capability models similar to professional Selenium testing services for web automation management aligned to domain-specific test maturity levels.

Conclusion

A strong test strategy for microservices and distributed systems cannot be built with traditional QA planning. It requires multi-layer validation, contract-based testing, service-level observability, fault-tolerance testing, and automation built into DevOps pipelines. The most reliable model is a hybrid one that tests both individual components and end-to-end business processes.

Successful microservices testing relies on engineering discipline, cross-team cooperation, and scalable automation. Enterprise teams that align their testing structures with architectural objectives deliver products faster, run more robust systems, and experience less downtime. Where internal capabilities need additional engineering depth, organizations may collaborate with service-driven partners offering enterprise solutions such as Selenium testing services aligned to digital product delivery.

The metaverse marks a major shift in digital interaction: virtual and real worlds intersect to form immersive experiences that engage users worldwide. As more companies move into virtual spaces, demand for developers who can build sophisticated 3D applications keeps growing. React and Three.js have become a powerful pairing for building compelling metaverse experiences with strong performance and user satisfaction.

Why React Three.js is a Metaverse Dream

React Three Fiber (R3F) simplifies the historically difficult work of building 3D scenes by bringing the familiar React component model to Three.js graphics programming. Developers can build virtual worlds using intuitive React patterns while keeping the full power of 3D rendering. Its declarative style makes complicated 3D scenes easier to manage and keeps codebases scalable.

In the past, creating 3D experiences was complex and time-consuming, and even simple geometric shapes required years of graphics programming experience. React Three Fiber lowers these barriers with a component-based approach that feels natural to web developers. By hiring React.js developers proficient in Three.js, companies gain professionals who know both contemporary web development methods and current 3D graphics programming.

The framework provides tools for managing complex 3D implementations, including advanced lighting systems, realistic shadow rendering, and smooth animations. These features let developers build visually impressive virtual environments that can compete with native apps while remaining cross-platform.

Getting Started: The Building Blocks

Configuring Your First Metaverse Project

Successful metaverse projects rest on a solid development environment. Developers typically start with a React application scaffolded by tools such as Vite.js, which offer faster builds and a better development experience. Setup then involves installing the Three.js libraries, configuring scene parameters, positioning the camera, and setting up lighting.

The initial learning curve is gentler than it might seem, especially for developers already familiar with React patterns. Within a few hours of installation, developers can render their first 3D scenes with interactive objects and dynamic lighting. Professional ReactJS development services can help establish a sound environment with adequate performance optimization and scalability from the start of the project.

The development process resembles that of a conventional React app, except that it works with 3D objects, lights, and cameras instead of buttons and forms. This familiar structure speeds up learning and shortens the time needed to become productive in metaverse development.

Creating Worlds That Feel Alive

Static 3D scenes are a starting point, but real metaverse experiences must be interactive and responsive. Users expect to click, drag, navigate, and feel present in the virtual space. These interactive features turn passive viewing into active exploration.

Effective metaverse applications support many types of interaction, from a simple mouse click to elaborate gesture recognition. Developers can use gaze-based interactions, controller input, and touch-responsive features to build a friendly user interface. Studies show that well-designed interactive applications retain far more active users than static ones.

The key to building engaging virtual worlds lies in understanding user behavior and designing interactions that feel natural and responsive. Professional development teams that combine technical knowledge with user experience design principles are best placed to design these experiences.

Performance Optimization: Keeping It Fast and Fluid

Essential Performance Strategies

Performance optimization is essential to the success of metaverse applications, because users expect responsive experiences across many devices and platforms. Three.js supports rendering techniques such as frustum culling, which restricts rendering to objects visible to the camera, and Level of Detail (LOD), which reduces an object's complexity as its distance from the camera grows.
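The distance rule behind LOD can be shown in a few lines. The sketch is written in Python purely to illustrate the arithmetic. In a real scene, Three.js provides its own `LOD` object for this, and the distance bands and level names below are assumptions.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical distance bands: (minimum distance, model variant to render).
LOD_LEVELS: List[Tuple[float, str]] = [
    (0.0, "high"),
    (25.0, "medium"),
    (75.0, "low"),
]


def pick_lod(
    camera: Sequence[float],
    obj: Sequence[float],
    levels: List[Tuple[float, str]] = LOD_LEVELS,
) -> str:
    """Choose the coarsest level whose minimum distance has been reached."""
    distance = math.dist(camera, obj)
    chosen = levels[0][1]
    for min_distance, name in levels:   # levels are sorted by ascending distance
        if distance >= min_distance:
            chosen = name
    return chosen


camera = (0.0, 0.0, 0.0)
near = pick_lod(camera, (0.0, 0.0, 10.0))
mid = pick_lod(camera, (0.0, 0.0, 30.0))
far = pick_lod(camera, (0.0, 0.0, 100.0))
```

Objects far from the viewer occupy few pixels, so swapping in a simpler model there saves rendering work with little visible difference.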

These techniques save considerable computation without compromising visual quality. Resource management techniques such as lazy loading, compressed textures, and fast model loading also help. A trustworthy React.js development company applies these optimizations methodically to ensure steady performance across different hardware.

Memory management is especially significant in complex virtual environments with many users and interactive elements. Careful garbage collection, texture pooling, and scene graph management keep performance stable even during long sessions.

Cross-Platform Compatibility

Contemporary metaverse solutions should run smoothly on a variety of devices, from expensive VR headsets to smartphones and budget laptops. This accessibility widens the audience and speeds up user adoption. Developers should apply responsive design principles to 3D environments as well.

A mobile-first strategy also works for 3D applications: begin with well-optimized models and effects for less capable devices, then layer on richer experiences for more capable ones. This approach maximizes compatibility across devices while still delivering high performance on high-end hardware.

Testing on many platforms and devices is necessary throughout development. Professional teams maintain device testing labs to verify performance and functionality across the full range of target hardware.

Social Integration and Advanced Features

Building Social and Collaborative Features

Social functionality turns an isolated 3D space into a community where people can interact, collaborate, and build relationships. Applications built with React Three Fiber can incorporate real-time multiplayer systems, custom avatar systems, and social interaction elements that create meaningful connections in virtual space.

Implementing social features requires close attention to networking protocols, user authentication, and real-time data synchronization. These technical challenges can be overcome with sound architecture planning and a skilled development team familiar with both 3D graphics and networking technology.

Effective social metaverse apps include voice chat, text messaging, gesture recognition, and collaboration tools that let people work on a project together or simply socialize in virtual worlds. Such features contribute significantly to user retention and engagement.

External Connectivity and API Integration

Metaverse applications rarely stand alone; they need to connect to outside services, databases, and business systems. These connections enable capabilities such as user authentication, content management, e-commerce, and real-time data visualization in 3D spaces.

Professional ReactJS web development companies can help with API integration that runs smoothly while preserving application security and performance. Integration is a complex activity that requires careful planning of data transfer, caching, and error handling to provide a smooth connection between virtual environments and external systems.

Contemporary metaverse applications often incorporate blockchain-based technology, non-fungible token (NFT) marketplaces, cryptocurrency payments, and decentralized storage. Such integrations require expertise in Web3 technologies as well as conventional web development.

Virtual Reality and WebXR Support

WebXR integration for React Three Fiber lets developers build immersive VR experiences that run directly in web browsers without separate native applications. This lowers the barrier to entry for users and supports VR across WebXR-capable devices.

The framework is compatible with multiple VR headsets and input devices, bringing virtual experiences to a general audience without requiring dedicated software installs. WebXR support continues to grow as browser vendors and hardware companies adopt the standard.

VR-powered metaverse applications offer a new level of immersion in which users feel physically present in virtual places. The technology is especially useful for training, virtual conferences, and entertainment, all of which benefit from a sense of space.

Search Engine Optimization and Accessibility

SEO for 3D Content

Metaverse applications intended to be discoverable should follow SEO best practices, with particular attention to how web-based experiences are structured. Developers should adopt server-side rendering (SSR) so that search engines can index the content surrounding 3D scenes and understand the application's structure.

Correct use of meta tags, structured data markup, and semantic URLs is therefore important for search visibility. These technical SEO features help search engines understand and classify metaverse content, improving organic discovery and user acquisition.

Optimizing 3D applications differs from optimizing regular web pages. It requires dedicated techniques for describing virtual worlds, interactive features, and user experiences in a form that search engines can understand and index.

Constructing Inclusive Virtual Experiences

Accessibility in a 3D environment poses distinct challenges and demands deliberate design and implementation. Not every user can use a VR headset or has access to high-end graphics hardware, so alternative interaction schemes and progressive enhancement are vital to inclusive design.

Effective accessibility practices include keyboard navigation, screen reader compatibility, alternative text for 3D objects, and adjustable performance settings that suit different hardware capabilities. These features make metaverse experiences usable across a range of abilities and technical constraints.

Professional development teams prioritize accessibility from the start of a project, applying universal design principles that benefit all users and meet the needs of users with disabilities.

Conclusion: Creation of the Future of Digital Contact

React Three.js is a breakthrough technology for building metaverse experiences on the web, combining the openness of web development with the capabilities of complex 3D graphics. Its component-based architecture, performance optimizations, and large community make it a strong starting point for ambitious virtual reality projects.

The metaverse landscape is changing fast, with new technologies, standards, and user expectations emerging constantly. Partnering with qualified ReactJS development services is especially valuable for businesses that want a significant presence in the virtual world without having to master the technical details and market dynamics themselves.

Technical skills alone are not enough for metaverse development. User experience design, performance optimization, cross-platform compatibility, and accessibility standards all help in designing virtual worlds that engage users and deliver value over time.

Combining familiar React development patterns with the powerful 3D capabilities of Three.js gives developers an unprecedented set of tools for building the future of digital experiences. As the virtual and physical worlds merge, the possibilities for innovation and creativity in metaverse development are virtually unlimited.

The future belongs to developers and companies that embrace these new technologies while focusing on user needs, accessibility, and performance. React Three.js provides an ideal foundation for building that future, one experience at a time.

With testing tools appearing and disappearing as fast as fashion trends, the technology sector changes quickly. Selenium, though? In tech years, this veteran automation tool has been around for what feels like an eternity. As we enter 2025, many people are wondering if Selenium is still worth the investment or if it will end up in the digital landfill.

Let's get past the noise and discover Selenium's true status in 2025.

Selenium's Staying Power: Why It's Not Going Anywhere

First of all, Selenium usage is not likely to stop anytime soon. According to recent market reports, the automation testing market is booming and is expected to hit a whopping $52.7 billion by 2027. And guess what? Selenium is still grabbing a massive chunk of that pie.

Why? This is primarily due to its effectiveness. The underlying technology of Selenium is incredibly robust. Furthermore, numerous companies employ senior engineers who have transitioned into managerial positions and possess extensive knowledge of Selenium. When they need to set up testing, they naturally lean toward what they already know and trust.

Think about it: even a decade after Chrome and Firefox took over, companies were still supporting Internet Explorer 6 because of customer requirements. Technology does not just vanish overnight, particularly when it is deeply integrated into corporate operations.

What's New with Selenium in 2025?

Since its release, Selenium 4 has revolutionized the industry. It's packed with improvements that have kept it relevant in 2025:

- Better Docker support

- Improved window and tab management

- Native Chromium driver for Microsoft Edge

- Relative locators that make finding elements way easier

- Better formatted commands for Selenium Grid

These upgrades aren't simply small changes; they're big enhancements that fix a lot of the problems developers encountered with prior versions. Selenium developers now have access to a considerably more powerful and versatile tool than they had only a few years ago.

The AI Factor: How Selenium Is Embracing Smart Testing

One of the coolest trends in 2025 is how services for Selenium automation testing are incorporating AI and machine learning. This isn't just buzzword bingo—it's making a real difference in how testing works.

Self-healing test automation is the perfect example. We've all been there: you write a perfect Selenium script, and then some developer changes an element ID, and suddenly your whole test suite breaks. Super annoying, right?

Now, AI-powered Selenium testing can actually identify alternative elements on the page when the expected one isn't found. The tests literally fix themselves at runtime. This advantage is huge for reducing maintenance headaches, which has always been one of Selenium's weak spots.
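The fallback idea behind self-healing can be sketched without any AI at all. The example below is a simplified stand-in: it tries a fixed, ordered list of locators, whereas AI-based tools choose alternatives using learned models. The names `find_with_fallback`, `ElementNotFound`, and `fake_find` are hypothetical, and `find` stands in for a real driver call such as WebDriver's `find_element`.

```python
from typing import Callable, Sequence, Tuple

Locator = Tuple[str, str]  # (strategy, value), e.g. ("id", "checkout-btn")


class ElementNotFound(Exception):
    """Raised by the hypothetical finder when a locator matches nothing."""


def find_with_fallback(
    find: Callable[[str, str], object],
    candidates: Sequence[Locator],
) -> Tuple[object, Locator]:
    """Try each locator in order and return the first element found."""
    for strategy, value in candidates:
        try:
            return find(strategy, value), (strategy, value)
        except ElementNotFound:
            continue  # this locator is stale; try the next candidate
    raise ElementNotFound(f"none of {list(candidates)} matched")


# Fake page: the button's id was changed, but its CSS class was not.
page = {("css selector", "button.checkout"): "<button>"}


def fake_find(strategy: str, value: str) -> object:
    """Stand-in for a driver lookup against the fake page above."""
    try:
        return page[(strategy, value)]
    except KeyError:
        raise ElementNotFound(value)


element, used = find_with_fallback(
    fake_find,
    [("id", "checkout-btn"), ("css selector", "button.checkout")],
)
print(used)  # prints ('css selector', 'button.checkout')
```

Returning the locator that actually matched lets a test report flag the stale one, so the script can be updated rather than silently relying on the fallback forever.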

Companies that offer Selenium testing services are increasingly adding these AI capabilities to their toolkits, making tests more reliable and requiring less babysitting.

Cloud and Distributed Testing: Testing at a Whole New Scale

A big change in 2025 is how Selenium is used in distributed cloud systems. As the number of browsers, devices, and operating systems we need to test on grows, the old way of running Selenium Grid on local PCs no longer keeps up.

Cloud-based Selenium testing is now standard, and distributed cloud designs have fixed the problems with latency that used to make remote testing hard. When you hire remote Selenium developers today, they're likely setting up tests that run simultaneously across multiple geographic locations, giving you faster results and better coverage.

This distributed approach means companies can run thousands of tests in parallel, cutting down testing time from hours to minutes. This significantly transforms CI/CD pipelines, where speed is crucial.
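A minimal sketch of why parallel execution shortens a run, assuming each test spends most of its time waiting on a remote browser. The `run_test` function is a placeholder that only sleeps, and the worker count and timings are made up for illustration; a real suite would open a remote session inside it.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_test(name: str) -> tuple[str, bool]:
    """Placeholder for one browser test; a real one would drive a remote browser."""
    time.sleep(0.05)  # simulate waiting on a remote session
    return name, True


def run_suite(names: list[str], workers: int) -> dict[str, bool]:
    """Run every test on a pool of `workers` threads and collect pass/fail results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(pool.map(run_test, names))


tests = [f"test_{i}" for i in range(20)]

start = time.perf_counter()
results = run_suite(tests, workers=10)
elapsed = time.perf_counter() - start

print(all(results.values()), f"{elapsed:.2f}s")
```

Run one after another, these 20 simulated tests would need about a second in total. With 10 workers they finish in roughly two batches, which is the same effect, at a much smaller scale, as spreading thousands of browser tests across cloud machines.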

Selenium vs. Newer Competitors: The Testing Showdown

It's important to acknowledge that tools such as Playwright are rapidly gaining traction. Microsoft's backing has given Playwright some impressive capabilities, especially for handling single-page applications and flaky tests.

But here's the thing: Selenium isn't going away. No single tool dominates the market. In 2025, we're seeing a hybrid approach where companies use:

- Selenium for their established, stable test suites

- Newer tools like Playwright for specific use cases where they excel

- AI-powered tools to complement both approaches

When you hire Selenium developers in 2025, you're often getting professionals who understand this ecosystem approach. They know when to use Selenium and when another tool might be better for a specific testing challenge.

The Job Market: Skills That Pay the Bills

If you're wondering about career prospects, the news is positive. Selenium developer jobs are still in high demand, with average annual salaries in the US ranging from $62,509 to $100,971. The automation testing market growth means opportunities for Selenium experts aren't drying up anytime soon.

What has changed is the skill set that employers look for when they hire remote Selenium developers. It's no longer enough to just know the basics. In 2025, the most in-demand Selenium professionals have skills in:

- AI-augmented Selenium testing

- Cloud-based and distributed test architectures

- Integration with DevOps and CI/CD pipelines

- Performance optimization for large test suites

- Security testing automation

These specialized skills ensure your resume matches what recruiters are searching for in 2025.

The Cost Factor: Why Selenium's Price Tag Matters

One giant reason Selenium remains relevant in 2025? It's still free. Under the Apache 2.0 open-source license, all the core components—WebDriver, IDE, and Grid—cost exactly zero dollars.

This is especially important in a commercial environment where every budget is looked at closely. There are ancillary expenses like infrastructure, training, and maintenance, but Selenium is a great deal compared to other commercial options.

Because of this cost advantage, Selenium automation testing services can offer low prices and still deliver good results. This accessibility is a big selling point, especially for small firms and startups.

Real Talk: Selenium's Challenges in 2025

Let's be honest—Selenium isn't perfect. Some challenges persist even in 2025:

- Test flakiness can still be an issue, especially with complex UIs

- Setup and configuration have a learning curve

- Maintenance requires ongoing attention

- Performance can lag behind newer tools in some scenarios

Nevertheless, the community knows about these problems and is working to fix them. When you hire Selenium engineers who actually know what they're doing, they bring solutions and best practices that help avoid these problems.
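One widely used practice against flakiness is to poll for a condition instead of sleeping for a fixed time. The sketch below shows the idea in plain Python. `wait_until` and `element_ready` are hypothetical names for this example, and in real Selenium code the library's own `WebDriverWait` plays this role.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def wait_until(
    condition: Callable[[], T],
    timeout: float = 5.0,
    interval: float = 0.1,
) -> T:
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout}s")
        time.sleep(interval)


# Simulated element that only becomes available on the third poll.
attempts = {"count": 0}


def element_ready() -> str:
    """Stand-in for a check such as 'is the button visible yet?'."""
    attempts["count"] += 1
    return "ready" if attempts["count"] >= 3 else ""


print(wait_until(element_ready, timeout=2.0, interval=0.01))  # prints "ready"
```

The test proceeds as soon as the condition holds and fails with a clear timeout otherwise, which tends to be both faster and more stable than a hard-coded sleep.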

Plus, the massive community support means that when problems do arise, solutions are usually just a Google search away. Try finding that level of support for a brand-new testing tool!

The Verdict: Is Selenium Worth It in 2025?

So what's the bottom line? Is Selenium still worth investing in for 2025 and beyond?

For most companies, the answer is yes, with some caveats. Selenium remains a solid choice if:

- You need a battle-tested solution with massive community support

- Cost is a big deal in your decision-making

- Your team already knows Selenium inside and out

- You're testing traditional web applications

- You want flexibility across different programming languages

In 2025, the smartest strategy is to incorporate Selenium into a larger testing toolkit rather than relying on it alone. When you hire remote Selenium developers or partner with Selenium testing services, look for those who get this nuanced approach.

Looking Ahead: Selenium Beyond 2025

What's next for Selenium beyond 2025? While nobody can predict tech futures with 100% accuracy, a few trends seem pretty clear:

- Selenium will keep evolving, with AI integration becoming deeper and more seamless

- The ecosystem of complementary tools and frameworks will grow

- Cloud-based and distributed testing will become even more sophisticated

- The community will continue driving innovations that keep Selenium relevant

Industry experts say Selenium's market share will probably decrease, stabilizing around 50% over the next five years and eventually settling into the 5-20% range over the next decade. That's not Selenium dying; it's settling into a role as a specialized tool with a dedicated user base.

Wrapping Up

Selenium may not be quite as prominent in 2025 as it once was, but it still has great value. Like COBOL programmers who still find employment decades after that language peaked, Selenium skills remain useful even as the environment changes. Even while staying open to new ideas, it's best to keep Selenium in your testing toolset. Selenium still deserves a place at the table, whether you want to hire engineers, work with Selenium testing services, or build your own testing capabilities in-house.

In 2025, testing isn't about finding the one best tool; it's about putting together the right combination of technologies to meet your objectives.

The first time I walked into Janet's boutique, inventory sheets were scattered across her desk, three different POS systems were running simultaneously, and she looked like she hadn't slept in days. "I spend more time managing systems than managing my business," she confessed. Six months later, after implementing a custom .NET Core retail management solution, Janet's store was running like clockwork—inventory accurate to the item, staff focused on customers instead of paperwork, and Janet was finally taking weekends off.

This transformation isn't unique. I've seen it happen repeatedly across retail businesses of all sizes. Let me share what makes these systems work and how .NET Core has become my go-to framework for retail solutions that actually deliver results.

Why .NET Core Changes the Game for Retailers

Think about what retailers really need: reliability during holiday rushes, security for customer data, and systems that talk to each other. This is where .NET Core truly shines.

Working with an ASP.NET development company gives you access to cross-platform capabilities that traditional frameworks can't match. One sporting goods chain I worked with runs their warehouse operations on Linux servers while their in-store systems use Windows—.NET Core handles both environments seamlessly.

The performance benefits are measurable, too. When Black Friday hits and transaction volumes spike 500%, a well-architected system keeps processing sales without breaking a sweat. I've seen checkout times decrease by 40% after migration to optimized .NET Core solutions.

The Technical Edge That Matters

"But what makes it better than what I'm using now?" That's what Alex, a hardware store owner, asked me when we first discussed modernizing his systems.

The answer lies in the architecture. Modern ASP.NET Core development employs:

- Microservices that allow individual components to scale independently

- Robust caching mechanisms that reduce database load during peak times

- Containerization for consistent deployment across environments

- Built-in dependency injection for more maintainable, testable code

These aren't just technical buzzwords—they translate directly to business benefits. When Alex's store launched a major promotion that went viral, his new system handled the unexpected traffic surge without missing a beat.
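To make the caching point concrete, here is a language-neutral sketch in Python of a time-to-live cache sitting in front of a database lookup. In an ASP.NET Core application this job would normally fall to the framework's own caching facilities such as `IMemoryCache`. The class `TTLCache`, the loader `load_price`, and the SKU and price values are hypothetical.

```python
import time
from typing import Callable, Dict, Tuple


class TTLCache:
    """Cache loader results per key for `ttl` seconds."""

    def __init__(self, loader: Callable[[str], float], ttl: float) -> None:
        self._loader = loader
        self._ttl = ttl
        # key -> (expires_at, value)
        self._entries: Dict[str, Tuple[float, float]] = {}

    def get(self, key: str) -> float:
        now = time.monotonic()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # fresh hit: no database call
        value = self._loader(key)  # miss or expired: go to the database
        self._entries[key] = (now + self._ttl, value)
        return value


calls = {"count": 0}


def load_price(sku: str) -> float:
    """Hypothetical database lookup that counts how often it is called."""
    calls["count"] += 1
    return 19.99


prices = TTLCache(load_price, ttl=30.0)
for _ in range(1000):
    prices.get("SKU-123")

print(calls["count"])  # prints 1
```

A thousand reads of the same product price cost a single database call here. That is the kind of load reduction that matters when a promotion sends many shoppers to the same few items at once.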

The Building Blocks of Retail Success

Inventory Management: Where Most Systems Fail

Remember Janet from earlier? Her biggest pain point was inventory management, and she's not alone. Studies show that retailers typically have only 63% inventory accuracy without proper systems.

Through ASP.NET web development, we built an inventory module that delivered:

- Real-time visibility across her three locations